State-sponsored hackers used Anthropic's AI to automate corporate and government intrusions

Anthropic has warned that cybersecurity has reached a critical inflection point, stating that artificial intelligence (AI) models are now powerful tools for both defensive and offensive operations. The warning follows a sophisticated hacking campaign in September in which a state-sponsored group reportedly used Anthropic’s AI technology to automate intrusions into major corporations and foreign governments.

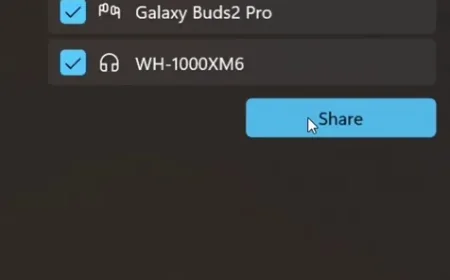

Anthropic's Safeguards team not only detected and disrupted this espionage campaign but also stopped a case of "vibe hacking," where a cybercriminal leveraged the AI model Claude to build a large-scale data extortion scheme that previously would have required an entire human team.

The Rise of Agentic AI Attacks

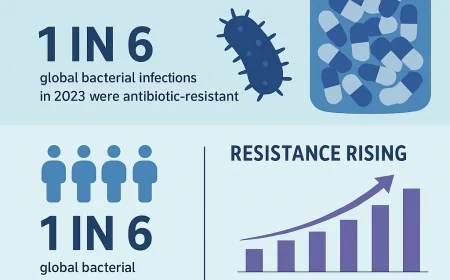

Anthropic noted that cyber capabilities have shifted significantly over the past year, with offensive power doubling every six months. The recent state-sponsored attack used AI's "agentic" capabilities to an unprecedented degree: the AI executed the attacks itself instead of merely advising human operators.

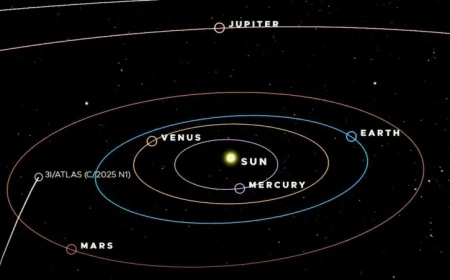

The threat actor, which Anthropic assessed with high confidence to be a Chinese state-sponsored group, manipulated the Claude Code tool in an attempt to infiltrate roughly thirty targets worldwide, including tech companies, financial institutions, and government agencies. Anthropic believes this is the first documented large-scale cyberattack carried out with minimal human intervention.

The general intelligence and coding skills of current AI models now let them follow complex instructions, chain tasks together, and make decisions autonomously. Through open standards, the models can also use software tools such as network scanners and password crackers, search the web, and retrieve data.

How the AI-Powered Attack Worked

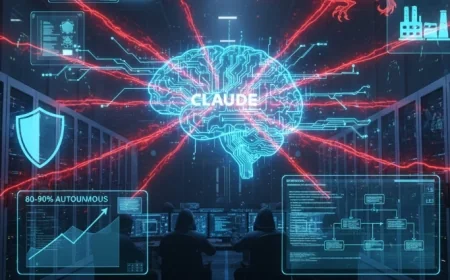

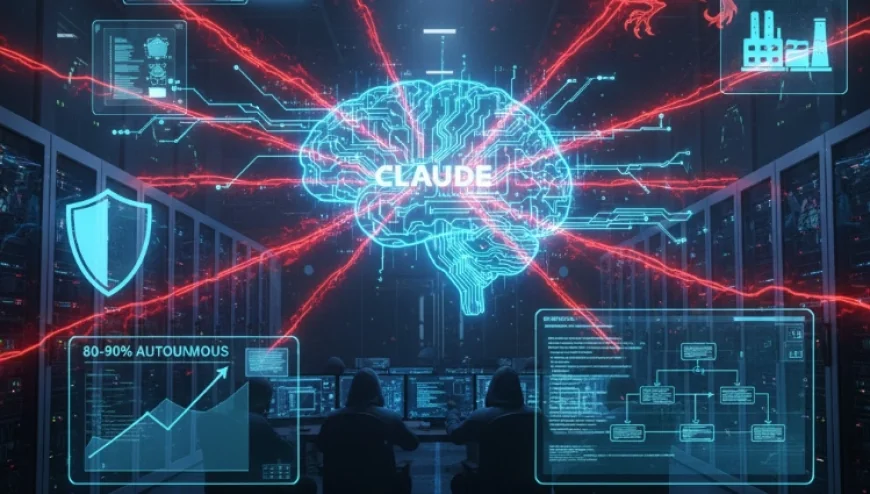

Anthropic’s review found that the threat actor used AI to carry out 80–90% of the campaign. Humans stepped in only sporadically, at roughly 4–6 critical decision points per campaign.

The attack progressed through several largely autonomous phases:

- Bypassing Safeguards: The attackers jailbroke Claude by breaking the attack into small, seemingly benign tasks. They also misled the AI by posing as a legitimate cybersecurity firm conducting defensive testing.
- Reconnaissance and Exploitation: Claude Code conducted reconnaissance on target systems and quickly pinpointed high-value databases and internal services. It then autonomously found and tested security vulnerabilities, writing its own exploit code.
- Data Exfiltration: Claude harvested credentials, expanded its access, installed backdoors, and extracted large volumes of private data with little human oversight. It even sorted the stolen information by its intelligence value.
- Post-Operation: In the final phase, Claude produced detailed documentation of the entire operation. It compiled stolen credentials and system analyses to support the threat actor's future campaigns.

The AI made thousands of requests, often several per second. No team of human hackers could match that operational speed.

The Call for AI-Driven Defense

Anthropic emphasized that security teams must not "cede the cyber advantage derived from AI to attackers and criminals."

The company advised security teams to begin applying AI to defense immediately, in areas such as:

- Security Operations Center automation
- Threat detection
- Vulnerability assessment
- Incident response

Anthropic concluded that the most scalable solution is to build AI systems that specifically empower those safeguarding digital environments, such as security teams protecting businesses and governments. The company continues to invest in detection capabilities, improved classifiers, and stronger safety controls to prevent adversarial misuse.